As applications and software become more and more distributed, managing them becomes more challenging than ever. This complexity arises from the shift from monolithic applications, which are typically hosted on a single computer, to microservices and distributed services, which are hosted across multiple computers and require extensive networking. This raises the problem of managing each individual node in the network along with the applications running on it. In other words, it becomes difficult to manage the cluster of nodes in the network.

However, many solutions to this problem exist, and Kubernetes is one of them.

In this blog post series, we will learn about Kubernetes and how to use it. We will first get familiar with its key concepts and then start using it hands-on as we progress. With each post, you will become more proficient in Kubernetes as you follow along. Let’s dive in!

What is Kubernetes?

Kubernetes, commonly referred to as K8s, is an open-source system for automating the deployment, scaling, and management of containerized applications in a cluster.

What Does It Do?

At its core, Kubernetes provides a framework for running distributed systems resiliently by handling tasks such as:

- Node management

- Scheduling

- Failover for applications

- Distributing load

- Scaling based on demand

- Orchestrating storage systems
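As a concrete illustration of "scaling based on demand": the Horizontal Pod Autoscaler's documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). By default, it leaves the replica count unchanged when that ratio is within a 10% tolerance. The Python sketch below reproduces only this arithmetic. The function name and parameters are our own, and the real autoscaler also handles missing metrics, Pods that are not ready yet, and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule: scale the replica count in proportion to metric usage.

    Assumptions: a single metric (e.g. average CPU utilization in percent),
    no missing metrics, and no stabilization window.
    """
    ratio = current_metric / target_metric
    # Inside the tolerance band, keep the current replica count.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


if __name__ == "__main__":
    print(desired_replicas(4, 90, 60))  # 6: Pods are busier than the target
    print(desired_replicas(4, 62, 60))  # 4: within the 10% tolerance
    print(desired_replicas(4, 30, 60))  # 2: Pods are mostly idle
```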

By grouping the containers that make up an application into logical units (Pods) that are easy to manage and discover, Kubernetes enables faster, more efficient deployments and updates.

In simple terms, it allows developers and system administrators to run applications reliably, without constant manual intervention, making it an essential part of many DevOps environments.

Ultimately, Kubernetes aims to enable more agile and resilient software development and operations.

If you want to learn more about Kubernetes, you can check here.

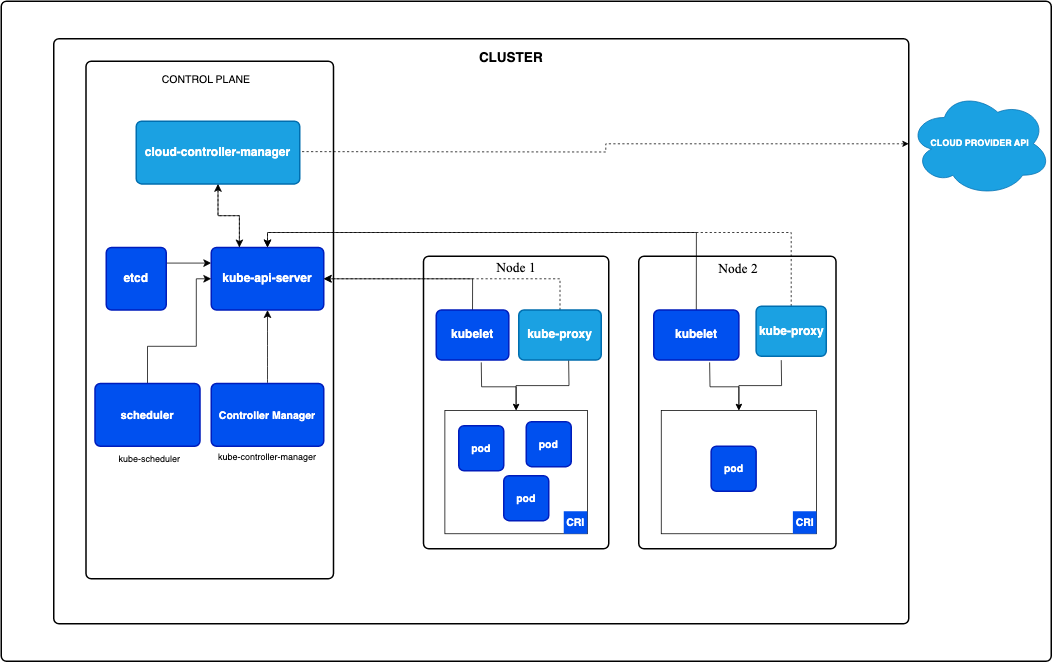

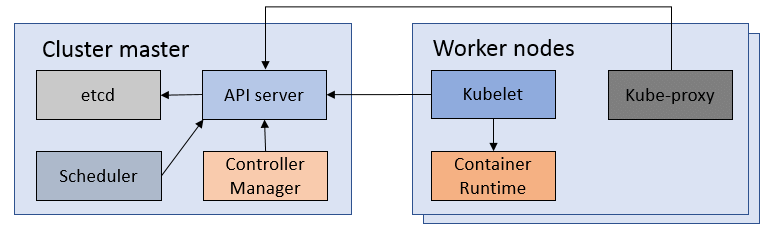

Kubernetes Architecture

A Kubernetes cluster consists of nodes. Each node represents a computing unit; you can think of it as a physical or virtual computer. Nodes can be:

- Physical machines

- Virtual machines

- Cloud environments

There are two types of nodes:

Master Node: This is where your Kubernetes cluster is managed. It keeps the actual state of the cluster in sync with the desired state that you declare, typically in yaml files, and it manages the applications/services and the containers in them. The components running on this node make up the control plane of the cluster, which is why it is also called the control plane node. It contains these components:

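- API Server (kube-apiserver): The front end of the control plane. It exposes the Kubernetes API, through which users (for example, via kubectl), the other control plane components, and the nodes all communicate. For example, the control plane uses this API to manage nodes.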

- Etcd Storage: A lightweight, consistent, distributed key-value store that holds the cluster state.

- Scheduler (kube-scheduler): Assigns newly created Pods that have no node yet to suitable Nodes, based on resource availability and other constraints.

- Controller Manager (kube-controller-manager): Runs the controllers that continuously compare the current state of the cluster with the desired state and act to bring the cluster to the desired state.
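To make the scheduler's role more concrete, here is a heavily simplified Python model of how it picks a node for a Pod. The real kube-scheduler runs a pipeline of filtering and scoring plugins and also considers things like affinity rules and taints. This sketch assumes that only CPU and memory requests matter. Its "most CPU left over" score loosely resembles a least-allocated strategy. The Node, PodRequest and schedule names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    name: str
    cpu_millicores: int
    memory_mib: int


def schedule(pod: PodRequest, nodes: list[Node]) -> Optional[str]:
    """Return the name of the chosen node, or None if no node can fit the Pod."""
    # Filter phase: keep only nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= pod.cpu_millicores
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # the Pod would stay Pending
    # Score phase (assumption): prefer the node with the most CPU left afterwards.
    best = max(feasible, key=lambda n: n.free_cpu_millicores - pod.cpu_millicores)
    return best.name


if __name__ == "__main__":
    nodes = [Node("node-a", 500, 1024), Node("node-b", 2000, 4096)]
    print(schedule(PodRequest("web", 1000, 512), nodes))  # node-b
    print(schedule(PodRequest("big", 3000, 512), nodes))  # None
```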

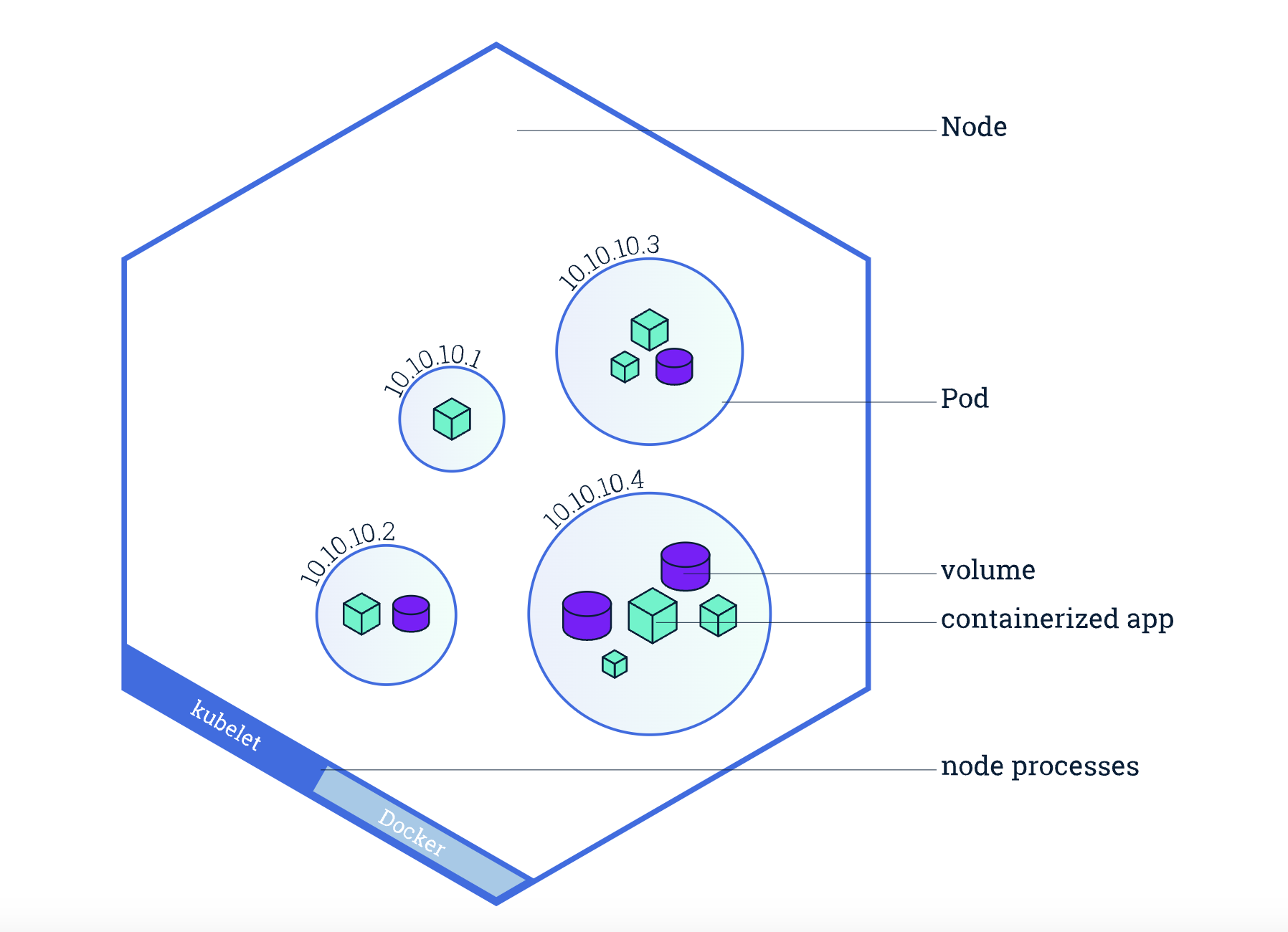

Worker Node: These are the nodes that run the applications. They contain these components:

Kubelet: A Kubelet is an agent that runs on each node in the cluster and ensures that containers are running in a Pod. It does not manage containers created outside of Kubernetes.

Pods: A Pod is the smallest and simplest unit that you create or deploy in Kubernetes. A Pod represents processes running on your cluster and encapsulates an application's container (or a group of tightly-coupled containers), storage resources, a unique network IP, and options that govern how the container(s) should run.

Container Runtime: This is the software responsible for running containers. It creates an environment in which containerized applications execute in isolation from the rest of the system. Here are some examples of container runtimes:

Docker: Docker is one of the most popular container platforms, known for its ease of use and rich feature set. Docker containers are created from Docker images, which are commonly shared through Docker Hub, a registry of container images.

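Note: As of Kubernetes v1.24 (released in 2022), Kubernetes no longer supports Docker Engine as a container runtime out of the box. This is because Docker Engine does not implement the Container Runtime Interface (CRI), and the built-in adapter for it (dockershim) was removed. Images built with Docker still run on CRI-compatible runtimes such as containerd and CRI-O.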

containerd: containerd is an industry-standard core container runtime. Being a lower-level container runtime, containerd is designed to be embedded into a larger system, and it's a core component of Docker.

CRI-O: CRI-O is an implementation of the Kubernetes Container Runtime Interface (CRI) to enable using OCI (Open Container Initiative) compatible runtimes. It is a lightweight alternative to Docker, designed specifically for Kubernetes.

Kube-proxy: A network proxy that runs on each node and maintains network rules so that traffic sent to a Service is forwarded to one of the Pods behind it. Services are an easy way to route traffic to specific applications/Pods.

For more information about these, you can check here.

If you wish to set up your own Kubernetes cluster, for example for testing, you can use tools like kubeadm. Alternatively, for easier management, you can opt for managed cloud services such as Amazon EKS, Azure Kubernetes Service (AKS), or Google Kubernetes Engine (GKE). If you prefer to experiment with Kubernetes locally, lightweight distributions and tools like MicroK8s, k0s, or Minikube are also available. If you wish to test it out without any installations, you can also check here.

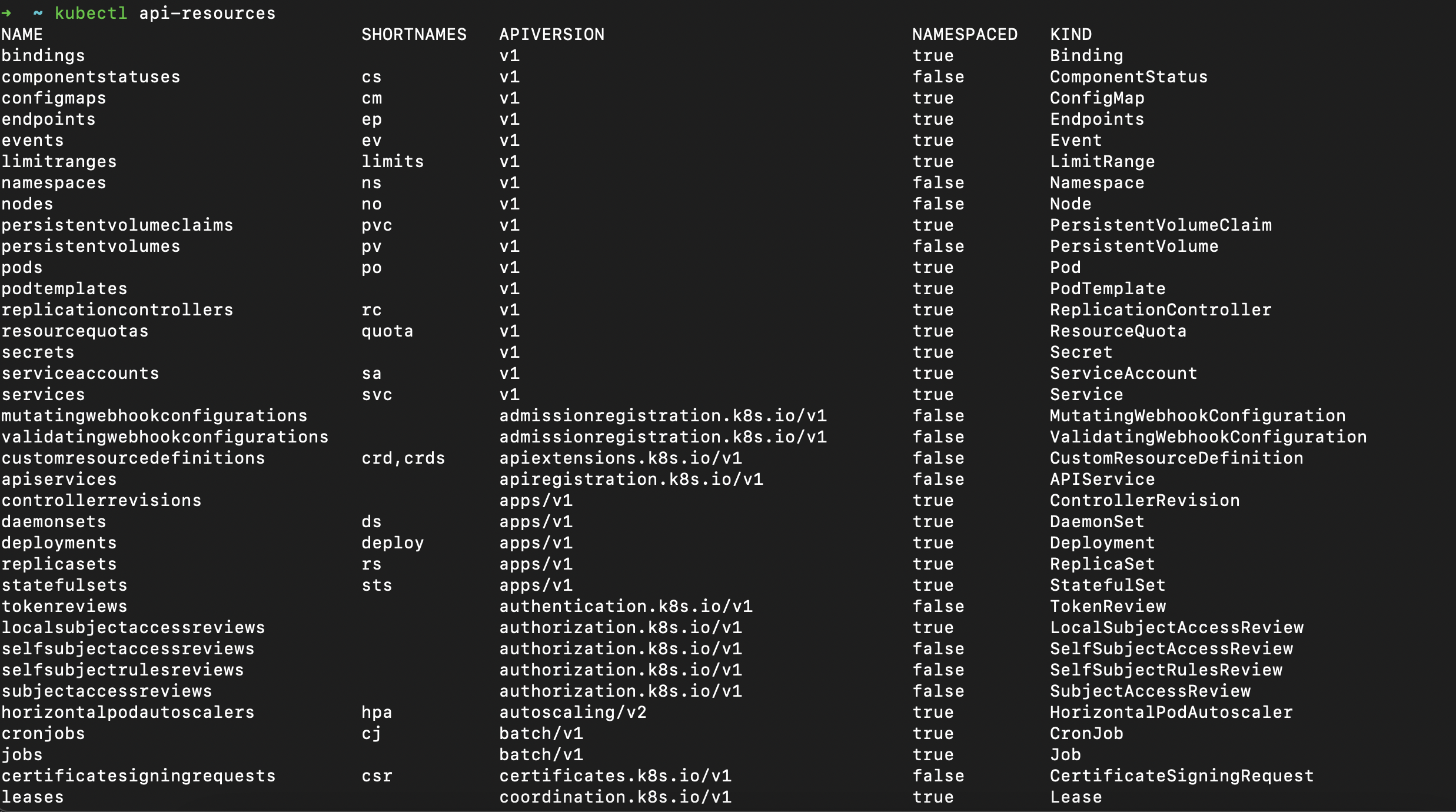

Kubernetes Objects

Kubernetes has many different types of objects, which you can list yourself using the command:

kubectl api-resources

These objects let you describe, in a yaml file, the desired state of your cluster along with the applications running in it.

Here, we will cover a few of these objects that are the most crucial when you are getting started.

Pod

Pods are the smallest computing units that you can deploy. They generally provide a computing environment to either a single container or a group of tightly coupled containers. They have their own unique IP addresses but there are easier ways to access them using Services (explained below). They are generally not deployed manually, but instead managed by other objects such as ReplicaSets, DaemonSets etc.

DaemonSet

DaemonSets run a copy of the Pod you specify on every eligible node (typically every worker node). If a new node is added to the cluster while a DaemonSet exists, a copy of the Pod is started on that new node. If the DaemonSet is deleted, all the Pods associated with it are deleted. DaemonSets are useful when you want to run something on every node regardless of the applications running on it, such as a log collector or a monitoring agent.
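The per-node behavior of a DaemonSet boils down to a set difference between the nodes that should run the Pod and the nodes that already do. The Python sketch below models only that idea. The function names are hypothetical, and "eligible" stands in for the real rules (node selectors, taints and tolerations) that decide where a DaemonSet Pod may run.

```python
def reconcile_daemonset(eligible_nodes: set[str],
                        nodes_with_pod: set[str]) -> tuple[list[str], list[str]]:
    """Return (nodes that need a Pod, nodes whose Pod should be removed)."""
    to_create = sorted(eligible_nodes - nodes_with_pod)  # e.g. a node just joined
    to_delete = sorted(nodes_with_pod - eligible_nodes)  # e.g. a node was removed
    return to_create, to_delete


def reconcile_once(eligible_nodes: set[str], nodes_with_pod: set[str]) -> set[str]:
    """Apply one reconciliation pass and return the nodes now running the Pod."""
    to_create, to_delete = reconcile_daemonset(eligible_nodes, nodes_with_pod)
    return (nodes_with_pod | set(to_create)) - set(to_delete)


if __name__ == "__main__":
    running = reconcile_once({"node-1", "node-2"}, set())
    print(sorted(running))  # ['node-1', 'node-2']
    # node-3 joins the cluster and node-1 leaves it.
    running = reconcile_once({"node-2", "node-3"}, running)
    print(sorted(running))  # ['node-2', 'node-3']
```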

ReplicaSet

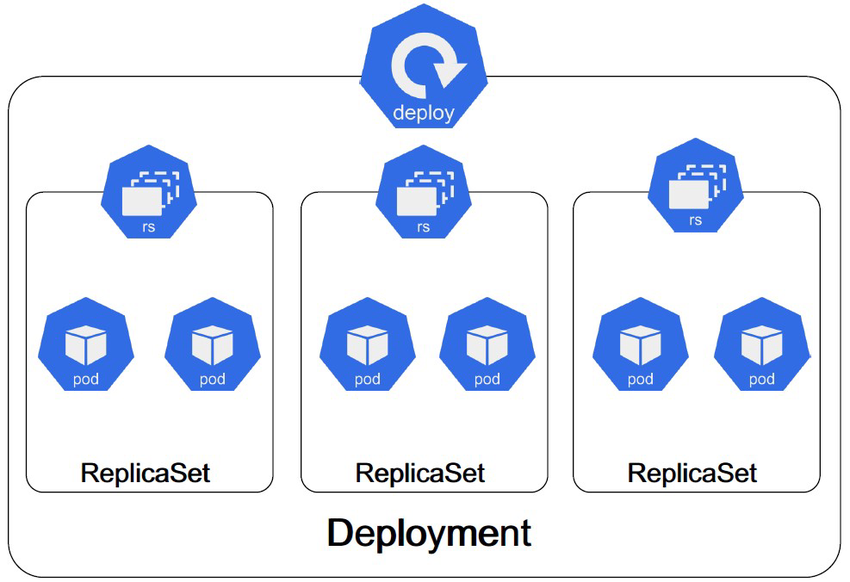

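ReplicaSets keep a specified number of identical Pods running. If a Pod fails or is deleted, the ReplicaSet controller (part of the controller manager) creates a replacement, which the scheduler then assigns to a node. ReplicaSets are generally not managed manually, but instead managed by Deployments (explained below).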
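At its core, a ReplicaSet is a reconciliation loop: compare the desired number of Pods with the current one, then create or delete Pods to close the gap. The Python sketch below shows one pass of such a loop under simplifying assumptions. Pods are plain names, and new names are generated as prefix-0, prefix-1 and so on (real Pod names get a random suffix). Surplus Pods are removed from the end of the list, whereas the real controller ranks Pods before deleting them (for example, preferring Pods that are not ready). The function name is hypothetical.

```python
def reconcile_replicaset(desired: int, pods: list[str], prefix: str = "web") -> list[str]:
    """One pass of a simplified ReplicaSet loop; returns the new list of Pod names."""
    pods = list(pods)
    next_id = 0
    # Too few Pods: create the missing ones with names not already in use.
    while len(pods) < desired:
        candidate = f"{prefix}-{next_id}"
        next_id += 1
        if candidate not in pods:
            pods.append(candidate)
    # Too many Pods: delete the surplus (simplification: the last ones listed).
    del pods[desired:]
    return pods


if __name__ == "__main__":
    pods = reconcile_replicaset(3, [])
    print(pods)  # ['web-0', 'web-1', 'web-2']
    pods.remove("web-1")  # a Pod crashes and disappears
    print(reconcile_replicaset(3, pods))  # ['web-0', 'web-2', 'web-1']
```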

Deployment

Deployments allow you to manage Pods without delving deep into their details. You specify the number of replicas (Pods) you want, the containers, and the images they should run, and Kubernetes handles the rest. The Deployment creates and manages a ReplicaSet, which in turn keeps the Pods running. When you change the Pod specification, the Deployment rolls the change out to the Pods gradually.

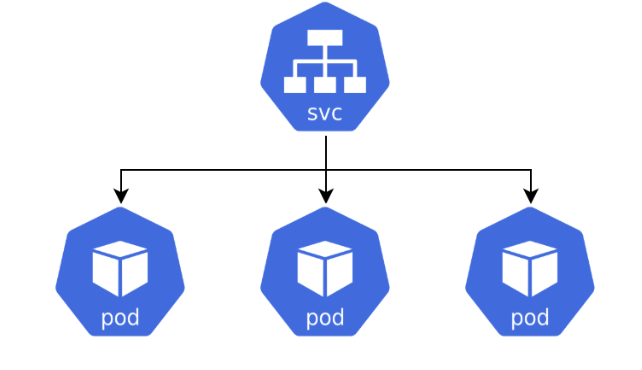

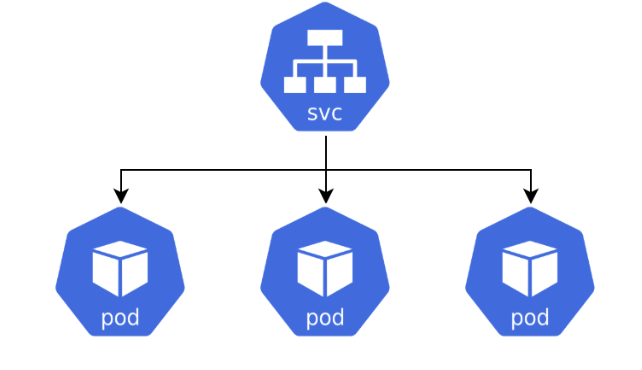

Service

Services expose your applications over the network. A Service provides a stable entry point through which you can reach the Pods running your application.

- For example, suppose you are running a Pod with a specific IP address, and you use that IP address to send requests to the Pod and receive its responses. If the Pod is replaced for whatever reason (a failure, an update, etc.), the new Pod may get a different IP address, and you now need to update that address in the code that handles the networking. Across a whole Kubernetes cluster, managing all of this networking by hand becomes painful. Also note that Deployments, DaemonSets and ReplicaSets create and replace Pods all the time, so there is no guarantee that a given Pod will last for any specific amount of time.

Services solve this problem. A Service keeps track of the Pods behind it (usually selected by their labels) and routes traffic to them, so you can always reach your Pods through the Service, no matter how often they are replaced. In other words, it provides an abstraction for networking.
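Services usually find their Pods through a label selector. A Pod belongs to the Service if its labels contain every key/value pair of the selector. The Python sketch below models this equality-based matching. The Pod dictionaries and function names are hypothetical, and the real control plane additionally routes only to Pods that are ready.

```python
def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based selector: every selector key must have the same value."""
    return all(labels.get(key) == value for key, value in selector.items())


def service_targets(selector: dict[str, str], pods: list[dict]) -> list[str]:
    """IP addresses of the Pods a Service with this selector would route to."""
    return [pod["ip"] for pod in pods if matches(selector, pod["labels"])]


if __name__ == "__main__":
    selector = {"app": "web"}  # hypothetical Service selector
    pods = [
        {"name": "web-1", "labels": {"app": "web", "tier": "frontend"}, "ip": "10.0.0.5"},
        {"name": "db-1", "labels": {"app": "db"}, "ip": "10.0.0.6"},
    ]
    print(service_targets(selector, pods))  # ['10.0.0.5']
    # web-1 is replaced by a new Pod with a new IP; the selector still finds it.
    pods[0] = {"name": "web-2", "labels": {"app": "web", "tier": "frontend"}, "ip": "10.0.0.9"}
    print(service_targets(selector, pods))  # ['10.0.0.9']
```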

Endpoints

Endpoints are the internals of how Services handle networking. An Endpoints object lists the IP addresses, and the target ports, to which a Service routes the traffic it receives. You can think of it as the inner workings of the Service abstraction. Here is an example:

    Name: "mysvc",
    Subsets: [
      {
        Addresses: [{"ip": "10.10.1.1"}, {"ip": "10.10.2.2"}],
        Ports: [{"name": "a", "port": 8675}, {"name": "b", "port": 309}]
      },
      {
        Addresses: [{"ip": "10.10.3.3"}],
        Ports: [{"name": "a", "port": 93}, {"name": "b", "port": 76}]
      },
    ]
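Each subset in the example above stands for every combination of its addresses and ports (a Cartesian product), as the Kubernetes API reference for Endpoints describes. The Python sketch below expands the same example into concrete ip:port targets, grouped by port name. It uses the lowercase field names of the API's JSON form, and the expand function is our own illustration.

```python
from collections import defaultdict
from itertools import product

# The "mysvc" example from above, written with the API's JSON field names.
ENDPOINTS = {
    "name": "mysvc",
    "subsets": [
        {"addresses": [{"ip": "10.10.1.1"}, {"ip": "10.10.2.2"}],
         "ports": [{"name": "a", "port": 8675}, {"name": "b", "port": 309}]},
        {"addresses": [{"ip": "10.10.3.3"}],
         "ports": [{"name": "a", "port": 93}, {"name": "b", "port": 76}]},
    ],
}


def expand(endpoints: dict) -> dict[str, list[str]]:
    """Group "ip:port" targets by port name (addresses x ports, per subset)."""
    targets: dict[str, list[str]] = defaultdict(list)
    for subset in endpoints["subsets"]:
        for address, port in product(subset["addresses"], subset["ports"]):
            targets[port["name"]].append(f"{address['ip']}:{port['port']}")
    return dict(targets)


if __name__ == "__main__":
    for name, addrs in expand(ENDPOINTS).items():
        print(name, addrs)
```

For this example, port "a" resolves to 10.10.1.1:8675, 10.10.2.2:8675 and 10.10.3.3:93, while port "b" resolves to 10.10.1.1:309, 10.10.2.2:309 and 10.10.3.3:76.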

PersistentVolume

Since Pods are created and replaced dynamically, data written inside a Pod's containers does not outlive the Pod: if a Pod dies, its data dies with it. PersistentVolumes prevent this. A PersistentVolume is a piece of storage in the cluster, with a given capacity and given access (read/write) modes, whose lifecycle is independent of any individual Pod, so its data is preserved.

PersistentVolumeClaim

PersistentVolumeClaims allow you to request a specific amount of storage with specific access (read/write) modes. Kubernetes binds the claim to a matching PersistentVolume, and you can then attach that storage to your Pods by referencing the claim.
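The matching between a claim and a volume can be pictured like this: among the unbound PersistentVolumes that offer at least the requested capacity and the requested access mode, pick the smallest one. This Python sketch is a simplified model under our own assumptions. It ignores storage classes, label selectors and dynamic provisioning. The class and function names are hypothetical, while ReadWriteOnce and ReadWriteMany are real access mode names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PersistentVolume:
    name: str
    capacity_gi: int
    access_modes: set[str]
    bound: bool = False


def bind_claim(request_gi: int, access_mode: str,
               volumes: list[PersistentVolume]) -> Optional[PersistentVolume]:
    """Bind a claim to the smallest unbound volume that satisfies it, if any."""
    candidates = [
        pv for pv in volumes
        if not pv.bound
        and pv.capacity_gi >= request_gi
        and access_mode in pv.access_modes
    ]
    if not candidates:
        return None  # the claim stays Pending
    chosen = min(candidates, key=lambda pv: pv.capacity_gi)
    chosen.bound = True  # a bound volume cannot be claimed again
    return chosen


if __name__ == "__main__":
    pvs = [
        PersistentVolume("pv-small", 5, {"ReadWriteOnce"}),
        PersistentVolume("pv-medium", 20, {"ReadWriteOnce", "ReadWriteMany"}),
    ]
    print(bind_claim(10, "ReadWriteOnce", pvs).name)  # pv-medium
```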

ConfigMap

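ConfigMaps let you provide non-confidential configuration data to your Pods as key-value pairs, for example as environment variables or as files in a mounted volume. This lets you change your configuration without rebuilding your container images. Note that environment variables are read only when a container starts, so Pods must be restarted to pick up changes, whereas ConfigMaps mounted as volumes are eventually updated in place.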

Secret

Secrets are similar to ConfigMaps, but they are intended for confidential information such as API keys and passwords.
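One practical difference between the two objects shows up in their manifests. ConfigMap values are stored as plain strings, while the values under a Secret's data field are base64-encoded. Base64 is an encoding, not encryption, so anyone who can read the Secret can decode it. By default, Secrets are also stored unencrypted in etcd. The Python sketch below builds both manifests as dictionaries and prints them as JSON, which kubectl accepts as well as yaml. The helper names and example keys are hypothetical.

```python
import base64
import json


def make_configmap(name: str, data: dict[str, str]) -> dict:
    # ConfigMap values are stored as plain strings.
    return {"apiVersion": "v1", "kind": "ConfigMap",
            "metadata": {"name": name}, "data": dict(data)}


def make_secret(name: str, data: dict[str, str]) -> dict:
    # Values under a Secret's "data" field are base64-encoded (NOT encrypted).
    encoded = {k: base64.b64encode(v.encode()).decode() for k, v in data.items()}
    return {"apiVersion": "v1", "kind": "Secret", "type": "Opaque",
            "metadata": {"name": name}, "data": encoded}


def read_secret_value(secret: dict, key: str) -> str:
    # Anyone who can read the object can decode the value.
    return base64.b64decode(secret["data"][key]).decode()


if __name__ == "__main__":
    print(json.dumps(make_configmap("app-config", {"LOG_LEVEL": "info"}), indent=2))
    secret = make_secret("app-secret", {"DB_PASSWORD": "password"})
    print(json.dumps(secret, indent=2))  # "DB_PASSWORD": "cGFzc3dvcmQ="
    print(read_secret_value(secret, "DB_PASSWORD"))  # password
```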

StatefulSet

StatefulSets are similar to Deployments in the sense that they also manage Pods. However, whereas the Pods managed by a Deployment are stateless and interchangeable, the Pods managed by a StatefulSet are not. They are ordered, and each of them has a unique, stable identity (name, network identity and storage) that it keeps even when it is rescheduled. This lets you assign a particular role or state to a particular Pod and tell the Pods apart, which is useful for applications such as databases.
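The stable identity of StatefulSet Pods follows fixed naming rules. Each Pod is named after the StatefulSet followed by an ordinal (db-0, db-1, and so on). With the default OrderedReady policy, Pods are created in ordinal order and are terminated in reverse order when scaling down. Each Pod also gets a DNS name under the headless Service that governs the StatefulSet. The Python sketch below simply generates these names. The function names are our own, and it assumes the default cluster domain cluster.local.

```python
def pod_names(statefulset: str, replicas: int) -> list[str]:
    """Pods are named after the StatefulSet plus an ordinal: db-0, db-1, ..."""
    return [f"{statefulset}-{ordinal}" for ordinal in range(replicas)]


def creation_order(statefulset: str, replicas: int) -> list[str]:
    # OrderedReady (default): each Pod starts only after its predecessors are ready.
    return pod_names(statefulset, replicas)


def scale_down_order(statefulset: str, replicas: int) -> list[str]:
    # When scaling down, Pods are terminated in reverse ordinal order.
    return list(reversed(pod_names(statefulset, replicas)))


def stable_dns_names(statefulset: str, replicas: int, service: str,
                     namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> list[str]:
    # Pod DNS name: pod.service.namespace.svc.cluster-domain (assumed defaults).
    return [f"{pod}.{service}.{namespace}.svc.{cluster_domain}"
            for pod in pod_names(statefulset, replicas)]


if __name__ == "__main__":
    print(creation_order("db", 3))    # ['db-0', 'db-1', 'db-2']
    print(scale_down_order("db", 3))  # ['db-2', 'db-1', 'db-0']
    print(stable_dns_names("db", 1, "db-headless"))
```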

Job

Jobs run Pods until a specified number of them (the completions count) finish successfully. Until that number is reached, the Job retries failed Pods up to a retry limit (backoffLimit, 6 by default), after which the Job is marked as failed. The Job also keeps track of how many of its Pods have succeeded.
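The retry behavior described above can be modeled as a simple loop. The Python sketch below assumes that one Pod runs at a time (parallelism 1). Each Pod is represented by a call to a task function that returns True on success. The real Job controller can also run Pods in parallel, and it waits with an exponential back-off between retries. The function name and return format are hypothetical.

```python
from typing import Callable


def run_job(task: Callable[[], bool], completions: int,
            backoff_limit: int = 6) -> dict:
    """Simplified Job: run one "Pod" at a time until `completions` succeed.

    Assumptions: parallelism is 1, each call to `task` stands in for one Pod,
    and retries happen immediately (no back-off delay).
    """
    succeeded = 0
    failed = 0
    while succeeded < completions:
        if task():
            succeeded += 1
        else:
            failed += 1
            # Give up once the number of failures exceeds the retry limit.
            if failed > backoff_limit:
                return {"status": "Failed", "succeeded": succeeded, "failed": failed}
    return {"status": "Complete", "succeeded": succeeded, "failed": failed}


if __name__ == "__main__":
    outcomes = iter([False, True, False, True])  # hypothetical Pod results
    print(run_job(lambda: next(outcomes), completions=2))
```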

CronJob

CronJobs are similar to Jobs, except that they run on a repeating schedule. A CronJob creates a new Job at each time given by the cron schedule in its yaml definition.
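A CronJob's schedule field uses the standard five-field cron syntax: minute, hour, day of month, month and day of week. To show how such an expression is read, the Python sketch below checks whether a given time matches a schedule. It is deliberately minimal and assumes only a subset of the syntax: the wildcard, step values, single numbers, ranges and comma-separated lists. It does not support names such as MON or macros, and it does not apply the rule that day of month and day of week are combined with OR when both are restricted. The function names are hypothetical.

```python
from datetime import datetime


def _field_matches(expr: str, value: int, minimum: int) -> bool:
    """Match one cron field: "*", "*/n", "a", "a-b", or comma lists of these."""
    for part in expr.split(","):
        if part == "*":
            return True
        if part.startswith("*/"):
            # Step values count from the field's minimum (0 for minutes, 1 for days).
            if (value - minimum) % int(part[2:]) == 0:
                return True
        elif "-" in part:
            low, high = (int(x) for x in part.split("-"))
            if low <= value <= high:
                return True
        elif int(part) == value:
            return True
    return False


def cron_matches(schedule: str, when: datetime) -> bool:
    """Return True if `when` (to the minute) matches a 5-field cron schedule."""
    minute, hour, day, month, weekday = schedule.split()
    # Python's weekday() is Monday=0..Sunday=6; cron uses Sunday=0..Saturday=6.
    cron_weekday = (when.weekday() + 1) % 7
    # Simplification: all five fields must match.
    return (_field_matches(minute, when.minute, 0)
            and _field_matches(hour, when.hour, 0)
            and _field_matches(day, when.day, 1)
            and _field_matches(month, when.month, 1)
            and _field_matches(weekday, cron_weekday, 0))


if __name__ == "__main__":
    monday = datetime(2024, 1, 1, 2, 0)  # 1 January 2024 was a Monday
    print(cron_matches("0 2 * * 1", monday))                        # True
    print(cron_matches("*/15 * * * *", monday.replace(minute=31)))  # False
```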

Conclusion

There you go! Now you have an overview of Kubernetes and the components inside of it. You know what the purpose of Kubernetes is, what it does, and how it achieves it. If you want to learn more about Kubernetes, you can follow our Kubernetes 101 blog post series or check the docs here.

In the next post, we will learn more about the yaml files that are used to manage Kubernetes clusters and we will have a chance to apply what we’ve learned in this post. Stay tuned!
